Creating realistic video content using AI

A revolution in content creation, or the job thief of the future?

by Peter Hicks

22.03.2024

We at dmcgroup started an experiment and wanted to test the limits of artificial intelligence. In 2023, we held a workshop in Düsseldorf to find out how we could harness the power of AI for our company and merge it with our creativity. We want to improve our capabilities and create exceptional solutions for our customers. We returned to Vienna with great fascination and started a new AI project full of zest for action: the merger of our locations has been a central topic for us over the past few months. To illustrate this merging of locations, we wanted to create a video with the support of AI.

And the journey begins

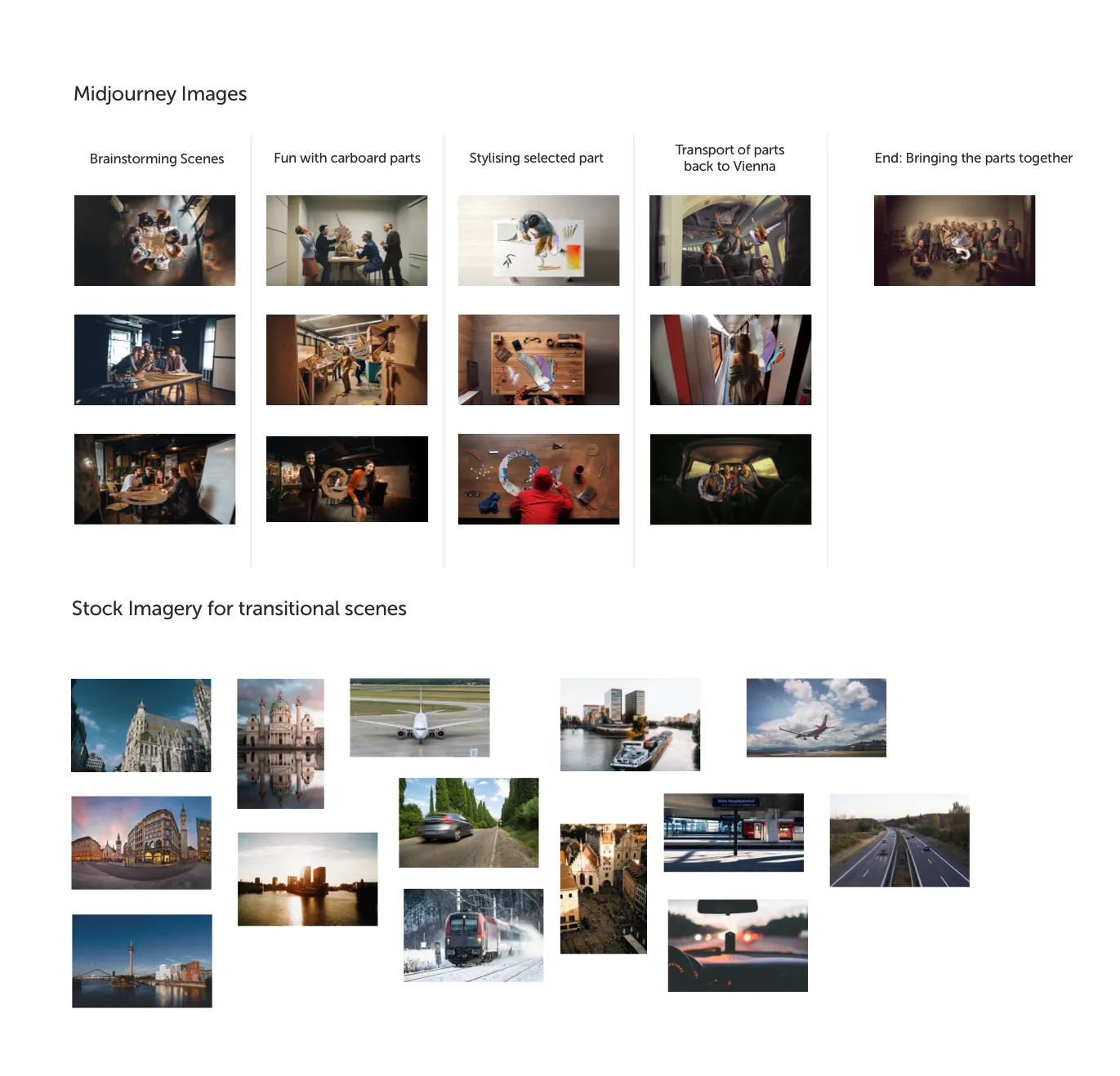

I started by creating a storyboard, which served as the basis for the video. As mentioned above, the merger of the dmcgroup locations was to be the focus of the story. At the beginning, each dmcgroup location designs a specific body part of the dmcgroup Beast. Accompanied by the Beatles song “Come Together”, each location then makes its way back to the dmcgroup headquarters in Vienna to present its work of art. In a dramatic transformation, the parts then come together to form the dmcgroup logo.

This was originally intended to be a video project that I would present at the Christmas party. Each location was to document the design of its body part and the journey to Vienna itself. The grand finale would then take place at the party, where all parts of the Beast would be brought together.

However, this also provided a unique opportunity to test the limits of innovation. I took on the challenge of having the storyboards created solely by artificial intelligence.

Prompts, prompts and more prompts

The magic of generative AI lies in the power of prompting. You “summon” the AI genie with your wishes, rub the lamp, and several options appear as if every wish had been fulfilled. This method proves effective only if the idea you have is vague. However, if it’s something very specific, such as a pencil sketch of a particular scene, the process becomes more complicated, costly and probably annoying.

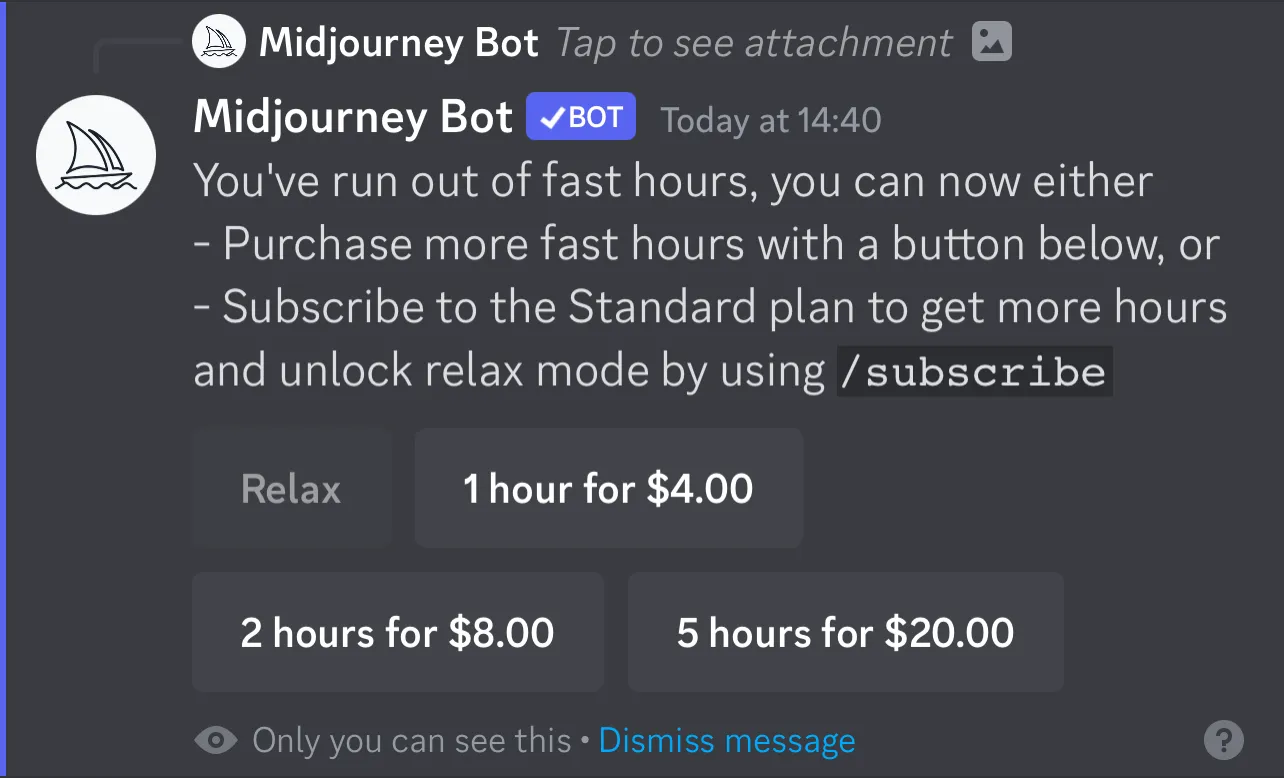

At the forefront of AI imaging is Midjourney, a leading platform known for its seemingly groundbreaking features. Through the chat application Discord, users prompt Midjourney and receive hyper-realistic imagery in return. Behind the curtain, the allocation of processing time and the queue limits depend on the user’s subscription.

Each prompt chips away at your allotted processing time. “Show me an image of a frog!” That’s GPU time. “Now make the frog green.” More GPU time. “Give the green frog black spots.” You guessed it: more GPU time!

The trial-and-error method of generative AI is all well and good until you run short of processing time.
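
To make the cost of this loop concrete, here is a minimal sketch in Python. The figures (a monthly allowance in minutes and a fixed cost per prompt) are purely hypothetical and do not reflect Midjourney's actual pricing; the point is only that every refinement draws from the same fixed budget.

```python
def prompts_remaining(budget_minutes: int, minutes_per_prompt: int, prompts_used: int) -> int:
    """Return how many more prompts fit into the remaining processing time.

    All three inputs are hypothetical illustration values, not real plan data.
    """
    remaining = budget_minutes - prompts_used * minutes_per_prompt
    if remaining <= 0:
        return 0
    return remaining // minutes_per_prompt


# Assumed example: a 200-minute allowance, 1 minute per prompt,
# and the three frog prompts from above already spent.
print(prompts_remaining(200, 1, 3))
```

The more specific the vision, the more re-prompts it takes, and the faster this number falls.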

If you have a very specific vision of a visual in mind, you are often not satisfied with vague results. You get caught in a loop where you re-prompt certain elements, keep what you like and refine the areas that need adjusting. Yet there are instances where Midjourney seems to miss the mark entirely. Midjourney has become known for its misinterpretation of hands. Occasionally it adds one, two or more extra fingers, which often leads to results that can be quite disconcerting.

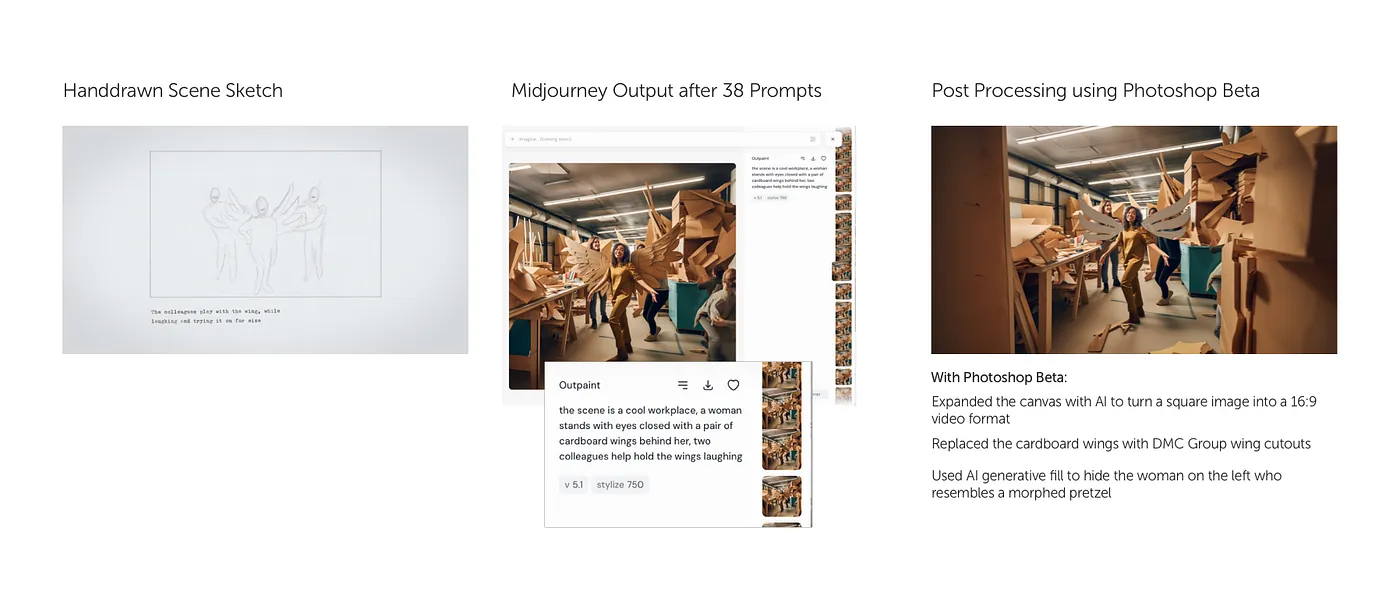

After struggling with the failures of Midjourney and not wanting to be stuck in an endless loop of prompting, I decided to take matters into my own hands, quite literally. I opened the generated image in Photoshop Beta and tried to refine and perfect the details.

“Please generate a normal-looking hand.”

In the end, I devised a hybrid approach: utilising Midjourney to get close to my desired result and then fine-tuning any problematic areas with Photoshop’s AI. The outcome? Images that closely resembled the storyboard slides I had sketched out.

Animating Static Imagery: Bringing it to life with Runway

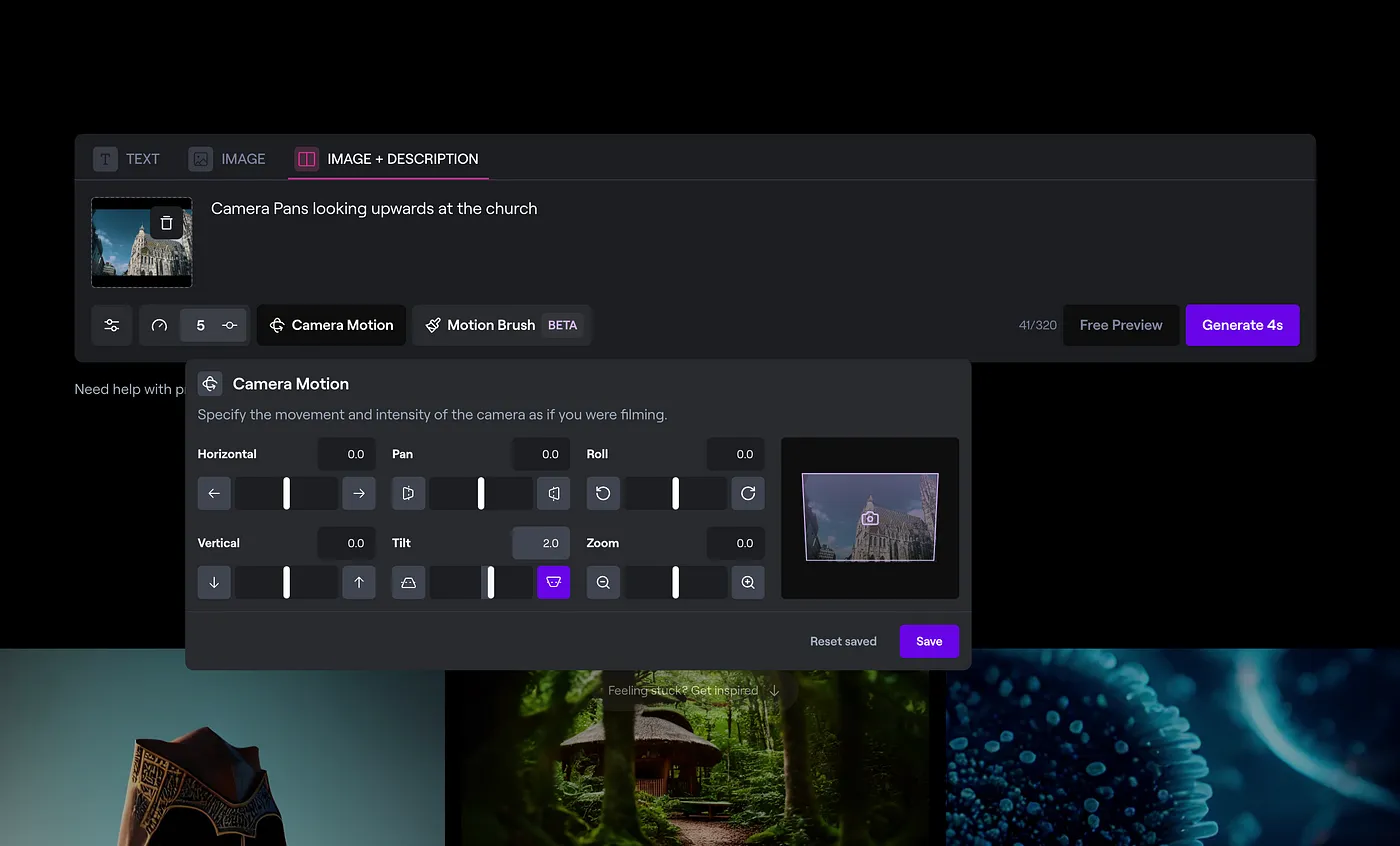

After fine-tuning my AI-generated images, I next wanted to test the AI’s ability to animate static images. One of the best-known AI tools designed to facilitate such processes is Runway.

Using Runway, I could upload my static scenes and entrust AI to infuse them with motion. I had the option to either grant AI full creative control or provide specific instructions for desired outcomes. Additionally, Runway allows users to dictate camera movements, such as panning and zooming, within the scenes.

As with Midjourney, every prompt consumes GPU resources. How much is available depends on the subscription; if you exceed the monthly quota, additional credits must be purchased.

Initially, I let Runway interpret and animate the imagery autonomously. At the time of writing, the output from Runway can be somewhat inconsistent. For simple scenes (e.g. a building against a sky background), Runway does an excellent job of animating clouds and sunlight and smoothly panning and zooming the camera, creating a realistic cinematic effect.

However, when I tried to give specific instructions, I faced challenges similar to those I had encountered with Midjourney. Either the software failed to grasp my requests, or perhaps I was simply not asking the right questions. Either way, this resulted in some scenes showing unnatural human movements or faces morphing into each other like something from a sci-fi horror movie.

A good example that illustrates some of these challenges was trying to create a scene on the highway that would show my dmcgroup colleagues driving their cars. Despite my best efforts, I could not get Midjourney to generate a static overall view of a two-lane highway. I then opted for a royalty-free image from Unsplash.

I had hoped that Runway would boost my confidence in AI again and that it would breathe life into the image. I assumed that this would be a relatively easy task for the AI. To my amazement, the AI struggled with the concept of cars moving in opposite directions. I tried to instruct the AI accordingly: “The cars on the right side of the image are moving away from the camera, and the cars on the left side of the image are moving towards the camera.” Regardless of my efforts, both groups of cars either moved towards or away from the camera, resulting in the surreal sight of cars moving backwards on a highway.

Unlike with the static imagery, there isn’t much flexibility in post-processing the video output from Runway once it’s been generated. With a still image, as in the earlier example of Midjourney’s hand depictions, it’s relatively straightforward to correct the errors with Photoshop’s AI.

However, since the video output is not in HD quality, the correction is much more difficult. For instance, to edit a video with a seven-fingered hand, one would need to either painstakingly correct the hand in every frame and hope for seamless playback, or substitute it with a static hand and animate and morph it to follow the movement of the original composition as closely as possible.

Regardless of the method chosen, it would still require a considerable amount of effort, contradicting the original purpose of using AI to simplify my work.
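
A rough calculation shows why frame-by-frame correction is so laborious. The clip length and frame rate below are assumed values for illustration, not figures from this project.

```python
def frames_to_retouch(clip_seconds: float, frames_per_second: int) -> int:
    """Number of individual frames that would need a manual fix."""
    return round(clip_seconds * frames_per_second)


# Assumed example: a 4-second clip at 24 frames per second.
print(frames_to_retouch(4, 24))  # 96
```

Even a clip of a few seconds means dozens of separate retouches, each of which has to line up with its neighbours to avoid visible flicker.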

Summary

The end result is passable. Lurking beneath the surface are a myriad of glitches and discrepancies that betray its artificial origins. For those in the know, these quirks are part and parcel of the AI creative process. They understand the limitations, the algorithms at work, and the painstaking effort required to push the boundaries of what’s possible.

For uninitiated viewers stumbling across AI-generated content for the first time, however, it’s a completely different story. When you see faces inexplicably melting into each other halfway through the video or people suddenly being swallowed up by the surrounding objects, a subtle sense of unease sets in. Something just doesn’t seem quite right.

In the field of AI-generated content, we are walking a tightrope between groundbreaking innovation and baffling imperfection – a fine line between creativity and confusion.

For the time being, our role as human “AI drivers” remains indispensable. We are the ones who have to navigate through this maze of confusion and bring creativity out of the algorithmic depths of AI.

One question remains unanswered: Is creating and optimizing prompts more tedious and error-prone than sticking to traditional methods of content creation, such as using stock images or homegrown content?

The facets of human communication are vast and complex and pose a major challenge for artificial intelligence, which is still struggling to understand exactly what we mean when we ask for something. As a result, we often repeat prompts ad nauseam with minor word changes, hoping for different results, only to be confronted again with results we have already seen.

While artificial intelligence is showing off its skills in creating eye-catching static images for presentations or website hero shots, the field of AI-generated videos is still in its infancy – an area whose potential has yet to be fully explored.
